Data Engineering Fundamentals – Building Effective and Scalable Solutions

Introduction

In the era of digital transformation, data has become one of the most valuable resources for businesses. Organizations have vast amounts of information at their disposal, but without the right tools and approaches to process it, this data remains unstructured and unusable. This is where data engineering comes in – a key discipline that allows companies to extract value from information, improve their operations, and make more informed decisions.

Companies today generate and process vast amounts of data – from customer transactions and manufacturing processes to marketing campaigns and consumer behavior analysis. Data engineering provides the infrastructure and processes needed to collect, store, and process this data.

Among the main applications of data engineering in business are:

- Optimization of operations – Through data analysis, companies can improve their production processes, automate tasks, and reduce costs.

- Better customer service – Data allows companies to personalize their services, anticipate customer needs, and offer a better user experience.

- Improved decision-making – Access to well-structured and analyzed data helps managers make informed strategic choices.

- Automation and predictive analytics – The use of algorithms and machine learning allows for trend prediction and automatic optimization of business processes.

In order for data engineering to be effectively implemented, it is necessary to use a variety of technologies and methodologies that ensure efficiency, accuracy, and security in information processing.

In this article, we will focus on the key aspects of data engineering required to create robust and effective information processing and management solutions. These include:

- SQL – the database language: We will look at how SQL is used to retrieve and manipulate information in relational databases, as well as query optimization strategies.

- Python for Data Engineers: One of the most widely used programming languages for data processing and process automation. We will focus on its libraries and applications in ETL (Extract, Transform, Load) processes.

- Apache Airflow – workflow management: We will discuss how this tool facilitates the orchestration of complex data processing processes and how it can be implemented in an enterprise environment.

- Data Warehouses: We will introduce the concept of data warehouses, their importance to business, and how they can be built effectively.

- Data Governance: We will examine good practices for ensuring the quality, security, and accessibility of information in an organization.

Data engineering is the foundation on which businesses build their analytical and strategic solutions. In the following sections, we will go into detail about each of these key technologies and show how they can be applied to achieve better business results.

1. Data Engineering Fundamentals

In the era of digitalization, organizations have vast volumes of data at their disposal, but their value depends on the ability to structure, process, and analyze it effectively. This is where data engineering comes in – a discipline that provides the necessary infrastructure and processes for data management.

What Is Data Engineering and Why Is It Critical for Business?

Data Engineering is a field that focuses on building and maintaining systems for collecting, storing, and processing information. It provides the foundation upon which data analysts, machine learning specialists, and business leaders work, providing them with accurate and well-structured data.

Why is data engineering critical?

- Provides reliable infrastructure – Companies rely on robust and scalable data processing systems to support fast and efficient business operations.

- Improves data quality – Well-built processes ensure the cleanliness, accuracy, and consistency of information, reducing the risk of errors in analysis.

- Facilitates automation and integration – Data engineering allows for the integration of different sources of information and automation of routine processes.

- Supports strategic decision-making – Companies that use well-structured data can respond faster to market changes and build more successful strategies.

The Difference Between Data Analytics and Data Engineering

Although often used together, data engineering and data analytics serve different roles:

| Characteristics | Data Engineering | Data Analytics |
|---|---|---|
| Target | Building an infrastructure for data management and processing. | Extracting useful insights from already processed data. |
| Focus | Data collection, storage, transformation and management. | Analyzing, visualizing and interpreting data. |
| Tools | SQL, Python, Apache Airflow, Hadoop, ETL platforms. | Tableau, Power BI, Python, Excel, statistical models. |
| Role in business | Ensures that data is accurate, available, and well-structured. | Supports informed decision-making through trend analysis and forecasts. |

In its simplest form, data engineering creates the “highways” along which data flows, and data analytics uses these “highways” to extract useful business insights.

Basic Principles in Building Scalable and Efficient Data Processing Systems

To be effective, data engineering must follow several basic principles:

- Scalability – Systems must be designed to handle increasing volumes of data without loss of performance. This includes using distributed computing and cloud-based solutions.

- Reliability – Engineering processes must ensure data accuracy and completeness using validation and quality monitoring techniques.

- Automation – Building automated ETL (Extract, Transform, Load) processes minimizes manual operations and reduces the risk of human errors.

- Performance Optimization – Fast access to data is essential, so indexing, caching, and information compression are used.

- Security & Access Management – Data must be protected through mechanisms such as encryption, role-based access control (RBAC), and regulatory compliance.

- Flexibility & Integration – Systems must be able to easily connect to different platforms and technologies, allowing integration between databases, cloud solutions, and BI tools.

An example of successful application of these principles is the construction of a centralized cloud-based data infrastructure that provides real-time access to reliable information for strategic decision-making.
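The reliability principle above (validation before data moves downstream) can be illustrated with a minimal sketch. The field names `order_id` and `amount`, the two rules, and the `validate_orders` helper are assumptions made for illustration; a real pipeline would define its own rules.

```python
from dataclasses import dataclass


@dataclass
class ValidationResult:
    valid: list
    rejected: list


def validate_orders(rows):
    """Split rows into valid and rejected, recording why each row failed."""
    valid, rejected = [], []
    for row in rows:
        errors = []
        if not row.get("order_id"):
            errors.append("missing order_id")
        amount = row.get("amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            errors.append("amount must be a non-negative number")
        if errors:
            rejected.append({"row": row, "errors": errors})
        else:
            valid.append(row)
    return ValidationResult(valid=valid, rejected=rejected)


# Hypothetical sample data: one clean row and two rows that break a rule.
sample_rows = [
    {"order_id": "A-1", "amount": 25.0},
    {"order_id": "", "amount": 10.0},   # fails: empty order_id
    {"order_id": "A-3", "amount": -5},  # fails: negative amount
]
result = validate_orders(sample_rows)
print(len(result.valid), "valid,", len(result.rejected), "rejected")
```

Keeping the rejected rows together with their error messages, rather than silently dropping them, is what makes quality monitoring possible later on.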

Data engineering is a fundamental element of the digital transformation of businesses. It provides the infrastructure that enables the analytics, predictions, and automation needed for strategic development of companies. In the next section, we will look at how SQL is used as a primary tool for managing and processing data in an enterprise environment.

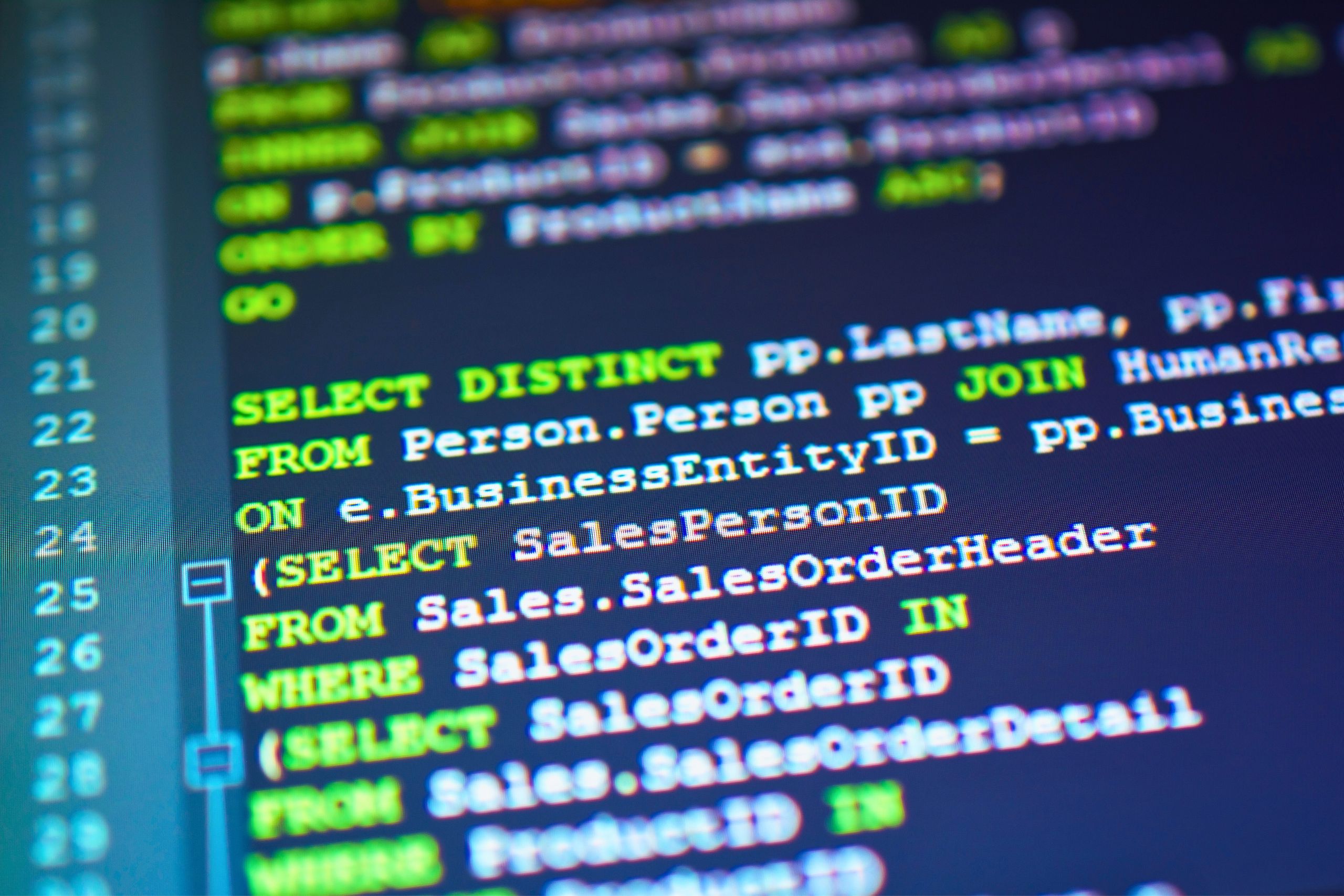

2. Working with SQL – Basic Concepts and Best Practices

SQL (Structured Query Language) is a fundamental tool for managing and manipulating data in relational databases. In data engineering, it plays a critical role by enabling the efficient storage, retrieval, transformation, and analysis of large amounts of information. Companies rely on SQL to manage their business processes, analyze customer behavior, and optimize operational efficiency.

How SQL Is Used to Retrieve and Manipulate Data in Relational Databases

Relational database management systems (RDBMS) such as MySQL, PostgreSQL, Microsoft SQL Server, and Oracle are widely used to store business information. SQL is the language that allows interaction with these systems through queries to create, retrieve, update, and delete data.

The main functionalities of SQL include:

- Information extraction – Using SELECT to retrieve specific data from the database.

- Filtering results – Using WHERE to filter rows, HAVING to filter groups, and LIMIT to cap the number of returned records.

- Data grouping – Using GROUP BY and aggregate functions such as COUNT(), SUM(), AVG().

- Linking tables – Combining information from different sources using JOIN operations.

- Data manipulation – Using INSERT, UPDATE, DELETE to change information in the database.

In everyday business practice, SQL is used for everything from generating financial reports to analyzing customer behavior and forecasting sales.

Important SQL Queries: Selecting, Filtering, Grouping, and Merging Data

- Data extraction (SELECT)

    SELECT first_name, last_name, email
    FROM customers;

This query returns the first name, last name, and email of every customer in the customers table.

- Data filtering (WHERE)

    SELECT *
    FROM orders
    WHERE order_date >= '2024-01-01';

This query retrieves all orders placed on or after January 1, 2024.

- Grouping information (GROUP BY)

    SELECT product_id, COUNT(*) AS total_sales
    FROM orders
    GROUP BY product_id
    HAVING COUNT(*) > 10;

This query counts the orders for each product and keeps only the products with more than 10 orders.

- Joining tables (JOIN)

    SELECT customers.first_name, customers.last_name, orders.order_id
    FROM customers
    INNER JOIN orders ON customers.customer_id = orders.customer_id;

The JOIN combines customers with their orders to give a more complete picture of the purchases made. Because it is an INNER JOIN, customers without any orders do not appear in the result.

SQL Query Optimization to Improve Performance

As the volume of data increases, the efficiency of SQL queries becomes critical. Slow queries delay the processes that depend on them and put a strain on servers. The following techniques are used to improve performance:

- Indexing (INDEX) – Creating indexes on frequently used columns speeds up the search for records.

    CREATE INDEX idx_customer_email ON customers(email);

- Limiting returned data – Using LIMIT on large data sets reduces the amount of data returned and, in many cases, the execution time.

    SELECT * FROM transactions ORDER BY transaction_date DESC LIMIT 100;

- Avoiding unnecessary SELECT * requests – Retrieving only the necessary columns instead of all of them reduces the load on the system.

- Using EXPLAIN for query analysis – Shows how the database plans to execute a query, which helps identify potential problems such as full table scans.

    EXPLAIN SELECT * FROM orders WHERE customer_id = 123;

- Table partitioning – Dividing large tables into smaller logical parts, which improves access speed.
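To see the effect of an index on a query plan without a database server, the following sketch uses Python's built-in `sqlite3` module. It is an illustration under assumptions: SQLite's `EXPLAIN QUERY PLAN` stands in for the `EXPLAIN` statement of MySQL or PostgreSQL, whose output looks different, and the table contents are generated sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO customers (email) VALUES (?)",
    [(f"user{i}@example.com",) for i in range(1000)],
)

query = "SELECT customer_id FROM customers WHERE email = ?"
params = ("user500@example.com",)


def query_plan(sql, args):
    """Return SQLite's plan description for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, args).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail text


plan_before = query_plan(query, params)  # no index yet: full table scan
conn.execute("CREATE INDEX idx_customer_email ON customers(email)")
plan_after = query_plan(query, params)   # the planner can now use the index

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first plan reports a scan of the table while the second names `idx_customer_email`.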

Example of Working with SQL in a Business Environment – Customer and Transaction Analysis

Let's consider a scenario in which an e-commerce store manager wants to analyze customer behavior and their purchases.

Scenario:

The company wants to find out which customers have been most active over the last month and what their average order size is.

SQL query for analysis:

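    SELECT
        customers.customer_id,
        customers.first_name,
        customers.last_name,
        COUNT(orders.order_id) AS total_orders,
        AVG(orders.total_amount) AS avg_order_value
    FROM customers
    INNER JOIN orders ON customers.customer_id = orders.customer_id
    WHERE orders.order_date >= '2024-01-01'
    GROUP BY customers.customer_id, customers.first_name, customers.last_name
    ORDER BY total_orders DESC;

The query uses an INNER JOIN because the date filter in WHERE would discard customers without matching orders in any case. The literal '2024-01-01' stands for the first day of the month being analyzed.

Result: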

- total_orders shows the number of orders placed by each customer.

- avg_order_value calculates the average value of orders.

- The results are sorted so that the most active customers are at the top.

This type of analysis allows businesses to identify key customers, personalize their offers, and improve their marketing strategies.
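One detail worth checking in queries like this is where the date filter sits when a LEFT JOIN is involved. The sketch below, which runs on an in-memory SQLite database with made-up customers and orders, compares a filter in WHERE with the same filter in the ON clause. The table contents and the short aliases are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, first_name TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER,
        order_date TEXT,
        total_amount REAL
    );
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Boris'), (3, 'Chen');
    INSERT INTO orders VALUES
        (10, 1, '2024-01-05', 40.0),
        (11, 1, '2024-01-20', 60.0),
        (12, 2, '2023-12-15', 30.0);
    """
)

# Filter in WHERE: rows whose order_date is NULL or too old are removed,
# so customers without recent orders vanish from the result.
where_filter = conn.execute(
    """
    SELECT c.first_name, COUNT(o.order_id), AVG(o.total_amount)
    FROM customers AS c
    LEFT JOIN orders AS o ON c.customer_id = o.customer_id
    WHERE o.order_date >= '2024-01-01'
    GROUP BY c.customer_id, c.first_name
    ORDER BY COUNT(o.order_id) DESC, c.first_name
    """
).fetchall()

# Filter in ON: every customer is kept; those without recent orders get a count of 0.
on_filter = conn.execute(
    """
    SELECT c.first_name, COUNT(o.order_id), AVG(o.total_amount)
    FROM customers AS c
    LEFT JOIN orders AS o
        ON c.customer_id = o.customer_id AND o.order_date >= '2024-01-01'
    GROUP BY c.customer_id, c.first_name
    ORDER BY COUNT(o.order_id) DESC, c.first_name
    """
).fetchall()

print(where_filter)  # [('Ana', 2, 50.0)]
print(on_filter)     # [('Ana', 2, 50.0), ('Boris', 0, None), ('Chen', 0, None)]
```

Which form is right depends on the question: a ranking of the most active customers only needs the first result, while a report that must also list inactive customers needs the second.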

SQL is a fundamental data engineering tool that enables efficient management and processing of information in relational databases. Whether it is selecting, filtering, grouping, or linking data, the use of optimized SQL queries is crucial to achieving high performance.

In the next part, we will look at how Python complements data engineering by providing powerful tools for automation and information processing.

3. Python for Data Engineers – Automation and Information Processing

Python is one of the most popular programming languages in the field of data engineering. Its flexibility, powerful libraries, and easy syntax make it a preferred tool for processing, transforming, and analyzing large volumes of information. In modern business, Python is used to automate routine tasks, optimize ETL (Extract, Transform, Load) processes, and integrate various data management systems.

How Python Is Used for Data Processing, Transformation, and Analysis

In data engineering, information processing is a critical process that includes:

- Extracting data from various sources – Python allows connection to relational databases (SQL), NoSQL repositories, APIs, and file systems (CSV, JSON, XML).

- Data transformation and cleansing – Much of the work of data engineers is related to removing duplicate records, handling missing values, and converting data into a unified format.

- Analysis and visualization – Python can be used to identify trends, model dependencies, and present data in graphical form.

An example of processing a CSV file with Python:

    import pandas as pd

    # Loading data from a CSV file
    df = pd.read_csv("sales_data.csv")

    # Removing missing values
    df.dropna(inplace=True)

    # Converting the date column to the correct format
    df['date'] = pd.to_datetime(df['date'])

    # Filtering sales for the last month
    recent_sales = df[df['date'] >= "2024-01-01"]

    print(recent_sales.head())

This code automatically processes, cleans, and structures the data, ready for analysis or loading into a database.
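Two of the cleansing steps named earlier, removing duplicate records and converting data into a unified format, can be sketched with the standard library alone so that the example runs without extra packages. The inline CSV content, the column names, and the two accepted date formats are assumptions for illustration; with pandas, `drop_duplicates` and `to_datetime` cover the same ground.

```python
import csv
import io
from datetime import datetime

# Hypothetical raw input, held in memory instead of a file.
raw_csv = io.StringIO(
    "order_id,date,amount\n"
    "1,2024-01-05,19.99\n"
    "1,2024-01-05,19.99\n"  # exact duplicate of the previous row
    "2,06/01/2024,5.00\n"   # day/month/year date format
    "3,2024-01-07,\n"       # missing amount
)

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats


def parse_date(text):
    """Try each known format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


cleaned, seen = [], set()
for row in csv.DictReader(raw_csv):
    key = tuple(row.values())
    if key in seen:        # drop exact duplicates
        continue
    seen.add(key)
    if not row["amount"]:  # drop rows with a missing amount
        continue
    cleaned.append(
        {
            "order_id": int(row["order_id"]),
            "date": parse_date(row["date"]),
            "amount": float(row["amount"]),
        }
    )

print(cleaned)
```

Two rows survive: order 1 once, and order 2 with its date rewritten as 2024-01-06.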

Essential Libraries for Data Engineering: Pandas, NumPy, and SQLAlchemy

Python has many libraries that make data processing easier. The most important ones include:

- Pandas – A basic library for working with tabular data, which allows reading, transformation, and analysis.

- NumPy – A powerful tool for working with multidimensional arrays and mathematical operations, used for quick calculations.

- SQLAlchemy – A library for working with relational databases using Python, which allows creating, modifying, and retrieving information from SQL databases.

Example of using SQLAlchemy to access a database:

    from sqlalchemy import create_engine
    import pandas as pd

    # Connecting to a database
    engine = create_engine("sqlite:///company.db")

    # Retrieving data with an SQL query
    query = "SELECT * FROM employees WHERE department = 'Sales'"
    df = pd.read_sql(query, engine)

    print(df.head())

This flexibility allows data engineers to quickly integrate different sources of information and process them efficiently.

Automating ETL (Extract, Transform, Load) Processes with Python

ETL (Extract, Transform, Load) processes are the foundation of data engineering. Python is used to automate these steps by extracting data from various sources, transforming it into an appropriate format, and loading it into a database or repository.

An example of an ETL process with Python:

    import pandas as pd
    from sqlalchemy import create_engine

    # Extract data from CSV file
    df = pd.read_csv("customer_data.csv")

    # Transformation: correct column names and format data
    df.rename(columns={"CustomerID": "customer_id", "AmountSpent": "amount_spent"}, inplace=True)
    df["amount_spent"] = df["amount_spent"].astype(float)

    # Load into database
    engine = create_engine("sqlite:///sales.db")
    df.to_sql("customers", con=engine, if_exists="replace", index=False)

    print("ETL process completed successfully.")

This automation reduces the need for manual processing and ensures reliable data transformation with minimal risk of errors.

Example of Automated Data Processing Using Python Scripts

Let's consider a real-world scenario in which an online retail company wants to analyze its sales on a daily basis and identify the best-selling products.

Scenario:

- The data source is a MySQL database with orders.

- It is necessary to automatically extract the data, process it and save the summarized results in a new table.

Python solution:

    import pandas as pd
    from sqlalchemy import create_engine

    # Connect to MySQL database
    engine = create_engine("mysql+pymysql://user:password@localhost/sales_db")

    # Retrieve recent orders
    query = (
        "SELECT product_id, COUNT(*) AS total_sales "
        "FROM orders "
        "WHERE order_date >= CURDATE() - INTERVAL 30 DAY "
        "GROUP BY product_id"
    )
    df = pd.read_sql(query, engine)

    # Load processed data into a new table
    df.to_sql("monthly_sales_summary", con=engine, if_exists="replace", index=False)

    print("The sales summary data has been successfully updated.")

This script automatically collects, processes, and records information, providing up-to-date data for analysis by managers and sales teams.
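The connection string in the script above embeds the user name and password directly in the code. A common alternative is to read them from environment variables, sketched below. The variable names (`SALES_DB_USER` and so on) and the `build_mysql_url` helper are hypothetical; only the URL layout follows the one used in the script.

```python
import os
from urllib.parse import quote_plus


def build_mysql_url(env=os.environ):
    """Assemble a SQLAlchemy-style MySQL URL from environment variables."""
    user = env["SALES_DB_USER"]
    password = quote_plus(env["SALES_DB_PASSWORD"])  # escape characters like '@' or '/'
    host = env.get("SALES_DB_HOST", "localhost")
    name = env.get("SALES_DB_NAME", "sales_db")
    return f"mysql+pymysql://{user}:{password}@{host}/{name}"


# Demo with an explicit mapping instead of the real environment.
demo_env = {"SALES_DB_USER": "etl_user", "SALES_DB_PASSWORD": "p@ss/word"}
url = build_mysql_url(demo_env)
print(url)  # mysql+pymysql://etl_user:p%40ss%2Fword@localhost/sales_db
```

The resulting string can be passed to `create_engine` in place of the hard-coded URL, which keeps credentials out of version control.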

Python is an indispensable tool for data engineers, providing powerful capabilities for processing, transforming, and analyzing information. Thanks to a rich ecosystem of libraries like Pandas, NumPy, and SQLAlchemy, it makes it easy to automate ETL processes and integrate different data sources.

In the next part, we will look at Apache Airflow – a powerful workflow orchestration tool that allows for the automation of complex ETL processes and efficient data flow management.

4. Apache Airflow – Workflow Orchestration

In today's world of data engineering, effective workflow management and automation is essential. Apache Airflow is one of the most powerful tools for orchestrating ETL (Extract, Transform, Load) processes, providing the ability to plan, execute, and monitor complex data processing tasks.

What Is Apache Airflow and Why Is It a Key Tool for Data Engineers?

Apache Airflow is an open source platform for creating, scheduling, and monitoring workflows. Originally developed at Airbnb and now a project of the Apache Software Foundation, it is widely used by companies around the world to coordinate ETL processes, integrate data, and manage large-scale computing tasks.

Why do data engineers rely on Apache Airflow?

- Flexibility – Supports working with various data sources and services, such as databases, cloud platforms, and APIs.

- Automation – Reduces manual work by scheduling and executing workflows at precisely specified times.

- Scalability – Suitable for both small and large organizations with millions of transactions daily.

- Traceability and control – Provides the ability to monitor and debug at every stage of data processing.

Airflow allows data engineers to define and manage ETL processes through intuitive Python scripts, instead of through complex configuration files.

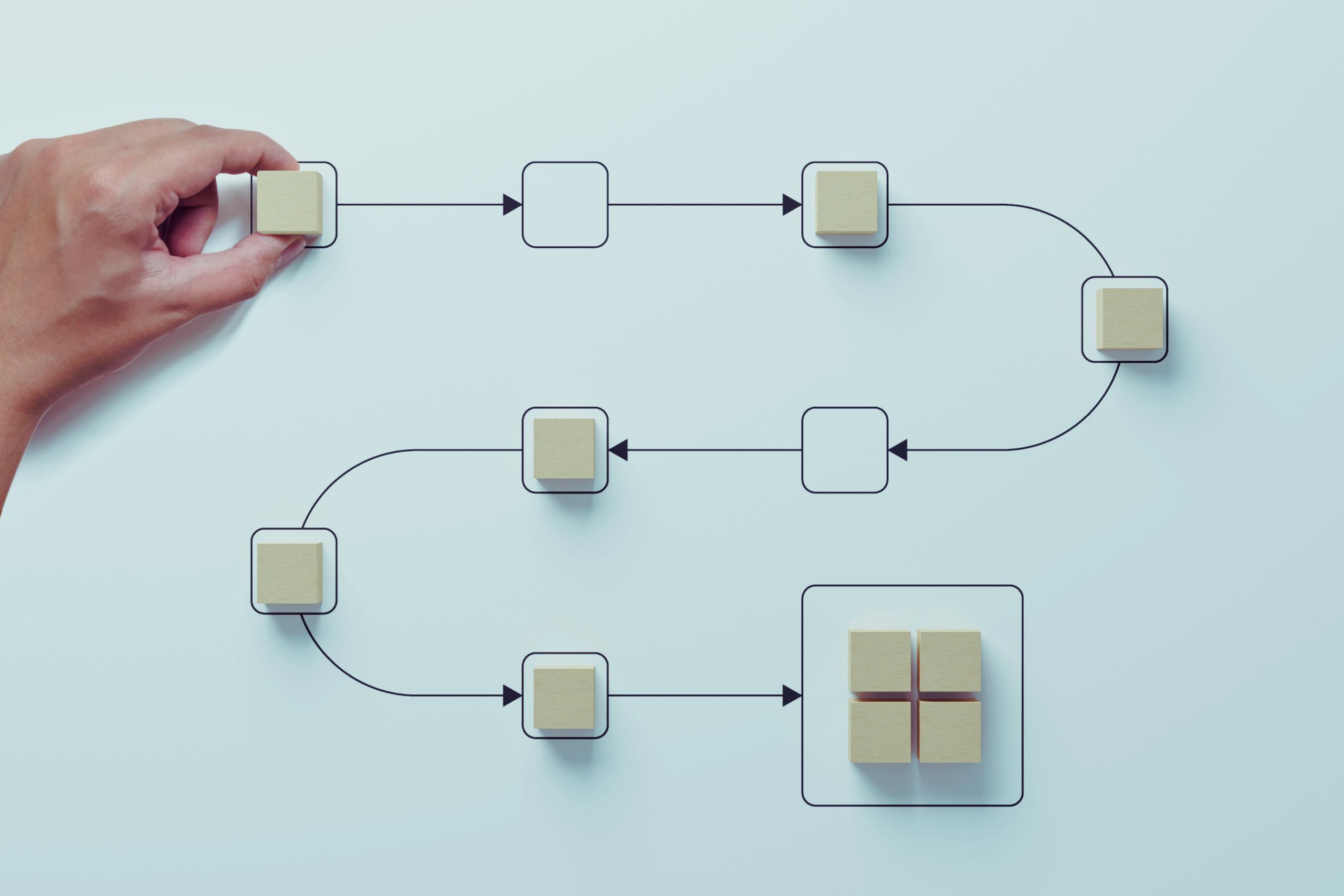

Basic Concepts: DAGs (Directed Acyclic Graphs), Operators, Tasks and Dependencies

Apache Airflow uses the concept of DAG (Directed Acyclic Graph) – a structured workflow consisting of individual tasks that are performed in a predefined order.

Key elements of Apache Airflow:

- DAG (Directed Acyclic Graph) – Represents the entire workflow as a graph of tasks and the dependencies between them, with no cycles. Tasks run sequentially or in parallel according to the specified dependencies.

- Operators – Templates that define what a task does, for example extracting data from a database, transforming files, or sending the results to another system.

- Tasks – Specific units of work within a DAG, each created by instantiating an operator. They can run independently or in conjunction with other tasks.

- Dependencies – Determine the order in which tasks are performed, ensuring the proper flow of the process.

Example of a DAG with three tasks:

[Extract data] → [Transform data] → [Load results into database]

This provides a clear and predictable workflow, minimizing the risk of errors in data processing.
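The idea behind a DAG, tasks ordered by their dependencies with no cycles allowed, can be tried out without installing Airflow. The sketch below uses `graphlib` from the Python standard library (Python 3.9 or later); it illustrates the concept only and is not how Airflow's scheduler is implemented. The task names mirror the three-step example.

```python
from graphlib import CycleError, TopologicalSorter

# Map each task to the set of tasks it depends on (its upstream tasks).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)  # ['extract', 'transform', 'load']

# A cycle makes the graph invalid: no execution order can satisfy it.
cyclic = {"extract": {"load"}, "transform": {"extract"}, "load": {"transform"}}
try:
    list(TopologicalSorter(cyclic).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print(has_cycle)  # True
```

The "acyclic" requirement is what guarantees that a valid execution order exists at all.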

How Airflow Automates ETL Processes and Improves Data Efficiency

Apache Airflow significantly improves ETL processes by providing:

- Planning and implementation – Airflow allows scheduling tasks at predefined intervals (for example, daily extraction of financial reports).

- Error management – If a task fails, Airflow can automatically retry it or send a notification about the problem.

- Integration with other technologies – Works with tools such as AWS, Google Cloud, PostgreSQL, Spark, and other data processing platforms.

- Visualization and monitoring – Airflow's graphical interface provides real-time information about the status of tasks and the ability to intervene when needed.

Example scenario:

A company uses Apache Airflow to automate daily loading of customer data from a cloud CRM system into a database, after the information has gone through transformation and validation processes.
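The retry-then-notify behavior described under error management can be sketched in plain Python. This is a conceptual illustration, not Airflow's implementation: in Airflow the same effect is configured per task through arguments such as `retries`, `retry_delay`, and `on_failure_callback`. The `run_with_retries` helper and the flaky task are hypothetical.

```python
import time


def run_with_retries(task, retries=3, delay_seconds=0.0, notify=print):
    """Run task(); on failure retry up to `retries` times, then notify and re-raise."""
    for attempt in range(1, retries + 2):  # first run plus `retries` retries
        try:
            return task()
        except Exception as exc:
            if attempt > retries:
                notify(f"task failed after {attempt} attempts: {exc}")
                raise
            time.sleep(delay_seconds)


calls = {"count": 0}


def flaky_extract():
    """Hypothetical task that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("CRM API unavailable")
    return "42 rows extracted"


outcome = run_with_retries(flaky_extract, retries=3)
print(outcome, "after", calls["count"], "attempts")
```

Retries help with transient failures such as a briefly unavailable API; a task that fails for a permanent reason still ends in a notification once the retries are used up.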

Example of Building a Workflow with Apache Airflow

The following code shows how an ETL process can be created in Apache Airflow that automatically extracts data, transforms it, and writes it to a database:

    from datetime import datetime
    import sqlite3

    import pandas as pd
    from airflow import DAG
    from airflow.operators.python import PythonOperator


    # Extract, transform and load functions
    def extract_data():
        data = {"customer_id": [1, 2, 3], "purchase": [100, 200, 150]}
        df = pd.DataFrame(data)
        df.to_csv("/tmp/data.csv", index=False)


    def transform_data():
        df = pd.read_csv("/tmp/data.csv")
        df["purchase"] = df["purchase"] * 1.2  # Add 20% VAT
        df.to_csv("/tmp/transformed_data.csv", index=False)


    def load_data():
        df = pd.read_csv("/tmp/transformed_data.csv")
        conn = sqlite3.connect("/tmp/sales.db")
        df.to_sql("sales", conn, if_exists="replace", index=False)
        conn.close()


    # Define DAG
    default_args = {
        "start_date": datetime(2024, 1, 1),
    }

    dag = DAG(
        "etl_process",
        default_args=default_args,
        schedule_interval="@daily",  # newer Airflow releases name this argument `schedule`
        catchup=False,  # DAG-level setting; it has no effect inside default_args
    )

    # Defining tasks
    extract_task = PythonOperator(task_id="extract", python_callable=extract_data, dag=dag)
    transform_task = PythonOperator(task_id="transform", python_callable=transform_data, dag=dag)
    load_task = PythonOperator(task_id="load", python_callable=load_data, dag=dag)

    # Defining dependencies between tasks
    extract_task >> transform_task >> load_task

Code explanation:

- Data extraction – A sample CSV file with customer values is generated.

- Transformation – 20% VAT is added to all purchases.

- Loading – Processed data is saved in a SQLite database.

- Process organization – DAG defines the sequence of tasks and schedules them for automatic execution every day.

Apache Airflow is a powerful tool that facilitates the automation of complex ETL processes and provides flexibility in workflow management. Thanks to its concepts of DAGs, operators, and tasks, it enables reliable planning and execution of data processing processes.

In the next part, we will look at the concept of Data Warehouses and how they are used to store and analyze large amounts of information.

5. Data Warehouses – Building Scalable Solutions

Data warehouses are specialized systems for storing and analyzing large volumes of structured data. They are used in organizations to integrate data from various sources, facilitate business analysis, and support strategic decision-making. Compared to traditional databases, data warehouses are optimized for rapid information retrieval, long-term storage, and complex analytical processes.

What Is a Data Warehouse and How Is It Different from Regular Databases?

While relational databases (RDBMS) such as MySQL, PostgreSQL, and SQL Server are used for operational tasks (such as storing current transactions and user data), data warehouses are designed for analysis and decision-making.

Key differences between a data warehouse and a relational database:

| Characteristics | Relational database (OLTP) | Data warehouse (OLAP) |
|---|---|---|
| Main goal | Transaction management | Analysis and reporting |
| Data volume | Small to medium | Large |
| Update frequency | Frequent | Less frequent, in batches |
| Query type | Short and frequent | Long, complex analytical queries |
| Optimization | Fast writes | Fast data retrieval |

Data warehouses store historical data, making them a powerful tool for uncovering long-term business trends and patterns.

Main Components and Architectures of Modern Data Warehouses

Modern data warehouses consist of several key components that ensure efficient storage, processing, and analysis of information:

- Data Sources – Information comes from various internal and external systems such as CRM, ERP, websites, IoT devices, etc.

- ETL/ELT processes (Extract, Transform, Load or Extract, Load, Transform) – Tools such as Talend extract and load data, dbt transforms it inside the warehouse, and Apache Airflow orchestrates the overall pipeline.

- Data Warehouse Storage – This is where the processed data is stored. Popular solutions include Amazon Redshift, Google BigQuery, and Snowflake.

- Metadata (Metadata Management) – Defines the structure and origin of data, which facilitates its management and use.

- BI and analysis (Business Intelligence & Analytics) – Tools like Tableau, Power BI, and Looker are used to visualize and analyze stored information.

Data warehouse architectures:

- Single-layer architecture – All data is stored in one place and retrieved directly. Suitable for small organizations.

- Two-layer architecture – Includes a stage for processing and cleaning the data before it is loaded into the warehouse.

- Three-layer architecture – The most common model, including:

  - Staging Layer – Raw operational data prepared for transformation.

  - Integration Layer (Data Warehouse Layer) – Structured and aggregated data.

  - Data Mart Layer – Optimized data sets for specific business needs.
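The three layers can be made concrete with a small sketch on an in-memory SQLite database. The table names (`stg_orders`, `dw_orders`, `mart_customer_spend`) and the sample rows are assumptions for illustration; a production warehouse would run on a platform such as those named above and hold far more data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Staging layer: raw rows exactly as they arrived (text values, duplicates included).
    CREATE TABLE stg_orders (order_id TEXT, customer_id TEXT, amount TEXT);
    INSERT INTO stg_orders VALUES
        ('1', 'c1', '10.50'),
        ('1', 'c1', '10.50'),
        ('2', 'c1', '4.50'),
        ('3', 'c2', '20.00');

    -- Integration layer: typed, deduplicated data.
    CREATE TABLE dw_orders AS
    SELECT DISTINCT
        CAST(order_id AS INTEGER) AS order_id,
        customer_id,
        CAST(amount AS REAL) AS amount
    FROM stg_orders;

    -- Data mart layer: an aggregate shaped for one business question.
    CREATE TABLE mart_customer_spend AS
    SELECT customer_id, COUNT(*) AS total_orders, SUM(amount) AS total_spent
    FROM dw_orders
    GROUP BY customer_id;
    """
)

mart_rows = conn.execute(
    "SELECT customer_id, total_orders, total_spent "
    "FROM mart_customer_spend ORDER BY customer_id"
).fetchall()
print(mart_rows)  # [('c1', 2, 15.0), ('c2', 1, 20.0)]
```

Each layer reads only from the one before it, so a problem in the raw data can be traced back through the integration layer to staging.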

Example of Building a Data Warehouse for Business Analysis Purposes

Let's consider a scenario in which an online commerce company wants to create a data warehouse to analyze its customer purchases and improve its marketing strategies.

Steps to build the data warehouse:

- Data extraction – Data about orders, products, and customers is collected from a SQL database, an API, and CSV files.

- Transformation – Data is cleaned, duplicate records are removed, and categories are standardized.

- Loading in the warehouse – Information is recorded in Google BigQuery or Snowflake for analysis.

- Creating BI reports – Power BI and Tableau are used to visualize sales trends.

Sample SQL code for analyzing customer orders:

    SELECT customer_id, COUNT(order_id) AS total_orders, SUM(order_amount) AS total_spent
    FROM orders
    WHERE order_date >= '2024-01-01'
    GROUP BY customer_id
    ORDER BY total_spent DESC
    LIMIT 10;

This query returns the ten customers with the highest total spend since the start of 2024, which can help target personalized marketing campaigns.

Data warehouses play a critical role in managing and analyzing large volumes of information. They allow companies to integrate and analyze data from various sources to make informed business decisions.

In the next part, we will examine the concept of Data Governance and how organizations ensure quality, security, and compliance with regulatory requirements when working with information.

6. Data Governance – Ensuring Security and Quality

In today's business world, data is a strategic asset that can make or break an organization. Data Governance encompasses policies, processes, and technologies that ensure the quality, security, and availability of information. Effective data governance ensures that organizations can use their information reliably, comply with regulatory requirements, and minimize the risks of data loss, misinterpretation, or misuse.

What Is Data Governance and Why Is It Critical for Organizations?

Data Governance is a systematic approach to managing information within an organization. It encompasses strategies for controlling, standardizing, and securing data to ensure that it is accurate, consistent, and accessible to the right people at the right time.

Key reasons why data governance is essential:

- Ensuring data quality – Inaccurate, duplicated, or incomplete data can lead to errors in analysis and decision-making.

- Compliance with regulatory requirements – GDPR, HIPAA, SOX and other regulations require strict data protection and management measures.

- Optimization of operational processes – Well-structured data management reduces processing time and improves business efficiency.

- Improving information security – Avoiding unauthorized access and potential cyberattacks by implementing data protection policies.

Example: A financial institution that stores customer data must have strict rules to control access to sensitive information to ensure its confidentiality and comply with regulatory requirements.

Basic Principles of Data Governance: Data Quality, Security and Accessibility

Data governance is based on three key principles:

1. Data Quality

Ensuring the accuracy, consistency, and timeliness of information is critical to the effectiveness of business operations. Key aspects of data quality include:

- Accuracy – The data must reflect the real situation.

- Completeness – There should be no missing key values or fields.

- Consistency – Different systems must store the same data without contradictions.

- Timeliness – Information must be updated in a timely manner to be relevant.
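Two of these aspects, missing values and contradictions between systems, lend themselves to simple automated checks. The sketch below is one possible way to measure them; the two hypothetical systems (`crm` and `billing`), their records, and the helper names are assumptions for illustration.

```python
def missing_ratio(records, field):
    """Share of records where `field` is absent, None, or an empty string."""
    if not records:
        return 0.0
    missing = sum(1 for r in records if r.get(field) in (None, ""))
    return missing / len(records)


def conflicting_keys(system_a, system_b, key, field):
    """Keys present in both systems whose `field` values disagree."""
    b_by_key = {r[key]: r[field] for r in system_b}
    return sorted(
        r[key]
        for r in system_a
        if r[key] in b_by_key and b_by_key[r[key]] != r[field]
    )


# Hypothetical customer records held by two different systems.
crm = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 3, "email": "chen@example.com"},
    {"customer_id": 4, "email": None},
]
billing = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 3, "email": "chen@old-domain.example"},
]

print(missing_ratio(crm, "email"))                             # 0.5
print(conflicting_keys(crm, billing, "customer_id", "email"))  # [3]
```

Tracking numbers like these over time turns data quality from a general goal into something that can be monitored and alerted on.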

2. Data Security

Data security includes measures to protect against unauthorized access, loss, or manipulation. The main protection strategies include:

- Access control – Differentiation of user rights for reading, modifying and deleting data.

- Encryption – Data encryption to prevent its unauthorized use.

- Monitoring and audit – Tracking actions taken with data to identify potential violations.

Example: A banking institution may use two-factor authentication and encrypted databases to protect financial information.

3. Data Accessibility

Ensuring that information is accessible to the right users is just as important as protecting it. Key aspects here are:

- Role identification – Determining which employees should have access to specific types of data.

- Documenting metadata – Maintaining information about the structure and meaning of data.

- Automate access management – Tools for regulating rights relative to the position and responsibilities of employees.

Example: In a manufacturing company, engineers may have access to material data but not to financial statements.
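The manufacturing example can be expressed as a minimal role-based access check with an audit trail. The roles, the `dataset:action` permission strings, and the helper functions are hypothetical; real deployments usually rely on the access controls of the database or governance platform rather than application code like this.

```python
# Hypothetical mapping of roles to the permissions they hold.
ROLE_PERMISSIONS = {
    "engineer": {"materials:read"},
    "accountant": {"financials:read", "financials:write"},
    "admin": {"materials:read", "financials:read", "financials:write"},
}


def is_allowed(role, dataset, action):
    """Return True if `role` may perform `action` on `dataset`."""
    return f"{dataset}:{action}" in ROLE_PERMISSIONS.get(role, set())


def read_dataset(role, dataset, audit_log):
    """Check permission, record the attempt, and raise if access is denied."""
    allowed = is_allowed(role, dataset, "read")
    audit_log.append({"role": role, "dataset": dataset, "allowed": allowed})
    if not allowed:
        raise PermissionError(f"{role} may not read {dataset}")
    return f"contents of {dataset}"


audit_log = []
print(read_dataset("engineer", "materials", audit_log))
try:
    read_dataset("engineer", "financials", audit_log)
except PermissionError as exc:
    print("denied:", exc)
print(audit_log)
```

Attaching permissions to roles rather than to individual employees means that a change of position only requires changing the person's role.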

Tools and Best Practices for Data Governance in a Corporate Environment

Successful data governance requires using the right tools and implementing good practices.

Popular Data Governance tools:

- Collibra – A data management platform providing a comprehensive Data Governance strategy.

- Informatica Data Governance – Data quality control, compliance and security solution.

- IBM InfoSphere Information Governance Catalog – A tool for cataloging and managing corporate data.

- Microsoft Purview – Cloud platform for metadata management and security.

Good practices in data governance include:

- Creating Data Governance Policies – Every organization must have defined rules for data processing and storage.

- Personnel training – Employees must understand the importance of data protection and follow established standards.

- Regular audit and control – Organizations should periodically analyze data quality and security.

- Using automated tools – Data monitoring and control tools significantly reduce human errors and increase efficiency.

Example: A large technology company implements a data governance policy that includes regular information quality checks and automated access control mechanisms.

Data governance is a critical process for any modern organization that wants to ensure the quality, security, and effective use of its information. By implementing good practices, using the right tools, and building a clear strategy, companies can minimize risks and extract maximum value from their data.

In the next part, we will look at practical steps for building a successful data engineering strategy, drawing on the technologies discussed so far.

7. Practical Steps for a Successful Data Engineering Strategy

Data engineering is a dynamic field where the right choice of technologies and approaches can significantly increase the efficiency of working with data. A successful data engineering strategy requires careful selection of tools, process automation, and integration between different systems. In this section, we will look at the key steps that organizations can take to build a reliable and scalable data processing infrastructure.

How to Choose the Right Technology Stack for Data Processing

Choosing a data engineering technology stack depends on several key factors: data volume, processing frequency, complexity of ETL processes, and analytics needs. Organizations should consider the following aspects:

- Database types:

  - Relational databases (SQL): PostgreSQL, MySQL, Microsoft SQL Server – suitable for transactional operations and structured data.

  - NoSQL databases: MongoDB, Cassandra – good for storing semi-structured or unstructured data and for highly scalable applications.

  - Cloud solutions: Google BigQuery, Amazon Redshift, Snowflake – cloud data warehouses designed for high-performance analytics.

- ETL and data orchestration:

  - Apache Airflow – for scheduling and automating ETL processes.

  - dbt (Data Build Tool) – for transformation and management of analytical data.

  - Talend and Fivetran – data extraction and integration tools.

- Programming and analysis:

  - Python – one of the most widely used data engineering languages, with libraries like pandas, NumPy, and SQLAlchemy.

  - Spark and Hadoop – suitable for processing large data sets in distributed environments.

- Business Intelligence (BI) and Analysis:

  - Power BI, Tableau, Looker – for visualization and report generation.

When choosing a stack, it is important to evaluate the scalability of the solutions and integration with existing platforms.

Automation and Optimization of ETL Processes

ETL (Extract, Transform, Load) processes are the backbone of data engineering, and automating them significantly increases the efficiency and reliability of data pipelines. The following steps are key to optimizing ETL processes:

- Using orchestration tools:

  - Apache Airflow for automatically running ETL jobs.

  - Prefect for managing ETL processes in cloud environments.

- Optimizing transformations:

  - Using incremental transformations, which process only new or changed data instead of the entire set.

  - Implementing parallel processing to reduce the execution time of ETL processes.

- Monitoring and observability of processes:

  - Logging errors and events using tools like ELK Stack (Elasticsearch, Logstash, Kibana).

  - Setting up notifications for failed tasks via Slack, PagerDuty, or email integrations.

- Using cloud solutions:

  - AWS Glue, Google Cloud Dataflow, and Azure Data Factory to automate ETL in scalable cloud environments.
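The monitoring step above, logging errors and notifying someone when a task fails, can be illustrated with a short sketch that uses only the Python standard library. The helper `run_with_retries` and the `notify` callback are hypothetical names introduced for this example. In practice the callback would post to Slack, PagerDuty, or email, and orchestration tools such as Apache Airflow provide their own retry and alerting settings.

```python
import logging
import time

logger = logging.getLogger("etl")


def run_with_retries(task, notify, retries=3, delay_seconds=0.0):
    """Run task(); log each failure, retry, and call notify() if all attempts fail."""
    for attempt in range(1, retries + 1):
        try:
            return task()
        except Exception as exc:
            logger.error("attempt %d/%d failed: %s", attempt, retries, exc)
            if attempt == retries:
                # Last attempt: send an alert and re-raise the original error.
                notify(f"ETL task failed after {retries} attempts: {exc}")
                raise
            time.sleep(delay_seconds)


# Hypothetical extract step that fails twice before succeeding.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return ["row-1", "row-2"]


alerts = []  # stands in for a Slack, PagerDuty, or email integration
result = run_with_retries(flaky_extract, alerts.append)
print(result, alerts)
```

Here the task succeeds on the third attempt, so no alert is sent. If every attempt failed, the error would be logged each time, one notification would be sent, and the exception would be raised so that the calling scheduler can mark the job as failed.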

Example: A company can automate customer transaction processing by extracting data from a SQL database, transforming it with Apache Spark, and storing it in a cloud data warehouse, such as Snowflake or Google BigQuery.
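The idea of an incremental transformation can be shown with a small, self-contained sketch. It is a simplified illustration under stated assumptions: in-memory SQLite databases stand in for both the source SQL database and the cloud data warehouse, plain Python replaces Apache Spark for the transformation, and the table names, column names, and the function `incremental_load` are hypothetical. The key point is the watermark: each run copies only rows with an id greater than the last id already loaded.

```python
import sqlite3


def incremental_load(source, target, last_id):
    """Copy only source rows with id greater than last_id; return the new watermark."""
    rows = source.execute(
        "SELECT id, customer, amount_cents FROM transactions "
        "WHERE id > ? ORDER BY id",
        (last_id,),
    ).fetchall()

    # Transform: normalize customer names and convert cents to a decimal amount.
    transformed = [
        (row_id, customer.strip().title(), cents / 100)
        for row_id, customer, cents in rows
    ]

    with target:  # commits on success, rolls back on error
        target.executemany(
            "INSERT INTO fact_transactions (id, customer, amount) VALUES (?, ?, ?)",
            transformed,
        )

    # If nothing new arrived, the watermark stays where it was.
    return rows[-1][0] if rows else last_id


# Hypothetical source system with two transactions.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
)
source.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [(1, " alice ", 1250), (2, "bob", 990)],
)
source.commit()

# Hypothetical warehouse table.
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE fact_transactions (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)

watermark = incremental_load(source, target, 0)  # first run loads ids 1 and 2

source.execute("INSERT INTO transactions VALUES (3, 'carol', 400)")
source.commit()

watermark = incremental_load(source, target, watermark)  # second run loads only id 3
loaded = target.execute(
    "SELECT id, customer, amount FROM fact_transactions ORDER BY id"
).fetchall()
print(watermark, loaded)
```

In a real pipeline the watermark would be stored between runs, for example in a metadata table, and a timestamp column is often used instead of an id when existing rows can also change.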

Integrating Various Tools for More Efficient Data Management

While individual tools offer powerful capabilities, true effectiveness comes from integrating them into a unified environment. Key strategies for integrating data engineering technologies include:

- Connecting ETL processes to data warehouses:

- Combining BI tools with data platforms:

  - Integrating Tableau with Google BigQuery to create visual reports on real business data.

  - Connecting Microsoft Power BI directly to Azure Data Lake or SQL Server for interactive analytics.

- Applying DevOps and CI/CD to Data Engineering:

  - Automating deployments with Terraform or Kubernetes.

  - Using GitHub Actions or Jenkins to automatically test ETL scripts.

- Ensuring security and access management:

  - Setting up role-based access control (RBAC), which determines who can read, modify, or delete data.

  - Using Data Catalogs (like Alation or Collibra) for centralized data management.

Example: A global company uses Apache Airflow to coordinate ETL processes, Google BigQuery as a central data warehouse, and Looker for interactive visualizations. All of these components are connected so that business analysts always work with up-to-date data.
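The role-based access model mentioned in the list above can be sketched as a simple mapping from roles to permitted actions. This is only an illustration of the concept: the role names, the `ROLE_PERMISSIONS` table, and the helper functions are hypothetical, and in practice access rules are normally enforced by the database, the data warehouse, or the cloud platform rather than by application code alone.

```python
# Hypothetical roles and the actions each one is allowed to perform.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "modify"},
    "admin": {"read", "modify", "delete"},
}


def is_allowed(role, action):
    """Return True if the role grants the action; unknown roles get no access."""
    return action in ROLE_PERMISSIONS.get(role, set())


def require(role, action):
    """Raise PermissionError when the role does not grant the action."""
    if not is_allowed(role, action):
        raise PermissionError(f"role '{role}' may not {action} data")


print(is_allowed("analyst", "read"))    # analysts can read
print(is_allowed("analyst", "delete"))  # but cannot delete
```

Denying access by default, as `is_allowed` does for unknown roles, is a common design choice because a missing rule then results in a refused request rather than an accidental grant.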

Planning and executing a successful data engineering strategy requires an integrated approach that combines the right technologies, automation, and effective governance. Choosing the right technology stack, optimizing ETL processes, and seamless integration between different tools are essential for building a reliable and scalable data infrastructure.

In the next part, we will summarize the key concepts covered in the article and highlight how organizations can use data engineering to optimize their business processes.

Conclusion

In a world driven by information, data engineering plays a key role in using corporate resources efficiently and in making strategic decisions. In this article, we examined the basic concepts related to data processing, including the use of SQL for working with databases, Python for automating ETL processes, Apache Airflow for orchestration, as well as building and managing data warehouses. In addition to the technical aspects, we also emphasized the importance of good practices in data management (Data Governance), which ensure the security, accuracy, and accessibility of data.

Companies that invest in the right data management technologies and strategies build a solid foundation for sustainable growth and innovation. Regardless of the size of the business, adopting best practices such as automating processes, integrating BI tools, and enforcing information protection policies leads to more effective decision-making and a competitive advantage. Data engineering is not just a technology trend – it is a significant factor in the digital transformation of organizations.

The Ruse Chamber of Commerce and Industry strives to be a reliable partner for business, providing access to training, practical resources, and expert guidance in the field of data and technology. Given the challenges that companies face today, the Chamber assists organizations in building the competencies they need to adapt to new realities and use data as a strategic asset.

Using data engineering is not just a matter of technology – it is a mindset that helps companies build sustainable and efficient processes. It is time for organizations to take advantage of the opportunities that modern technologies offer to optimize their operations and build their future on a solid foundation of data and analytics.

Note: The publication was prepared with the help of generative artificial intelligence, which assisted in structuring and formulating the content. The final text is the result of the author's expert contribution, which guarantees its accuracy and practical focus.